
Nvidia recently released significant updates to its AI Enterprise Suite, its software platform for running AI in production. With the new Version 6.3, Nvidia improves how businesses build, run, and scale AI systems across several platforms. These changes go beyond technical fixes: they simplify infrastructure, reduce delays, and support powerful AI models. Whether your company runs on private servers or in the cloud, Nvidia's new tools aim to deliver better performance and smoother deployment.

Tighter integration with Oracle Cloud, the NIM Operator 2.0, and support in Dell's AI Factory highlight Nvidia's commitment to advancing enterprise AI. Through full-stack tools and closer cooperation with partners, Nvidia is laying a future-ready foundation for business AI. Let's look at the main developments that could affect your AI strategy.

Nvidia's Infrastructure Release 6.3 (Infra 6.3) brings significant system performance improvements. It includes the latest R570 data center GPU drivers, which improve reliability and support newer hardware. It also upgrades the container toolkits and DOCA-OFED drivers, which makes it easier to run AI workloads across Kubernetes clusters. The NIM Operator 2.0 is another significant addition: it speeds up model loading and scaling.

As a result, microservices can respond quickly to changes in demand. Nvidia also streamlined package management and system updates, which improves workflow and saves time. With Infra 6.3, Nvidia makes enterprise AI systems easier to manage. Each update targets real-world business needs, and businesses get better infrastructure along with long-term support. These improvements benefit AI operations whether they run on private servers or in the cloud. Nvidia's new infrastructure tools give developers and IT teams more control, faster performance, and fewer delays.

One of the most significant changes is the inclusion of the NIM Operator in the core software. Until recently, this utility was optional; it now ships with Nvidia AI Enterprise by default. The NIM Operator 2.0 offers several advantages for AI microservices. Advanced pre-caching lets models load faster, which reduces wait times and lets AI applications respond quickly. Autoscaling is also improved: the operator can adjust resource use automatically, which is useful when demand fluctuates.
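To make "autoscaling" more concrete, here is a minimal sketch of the scaling rule used by the Kubernetes Horizontal Pod Autoscaler (HPA): desired replicas = ceil(current replicas × current metric / target metric). The article does not say which algorithm the NIM Operator uses, so this assumes HPA-style scaling. The function name `desired_replicas`, the replica bounds, and the example metric (requests per second per pod) are hypothetical and chosen only for illustration.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Hypothetical helper mirroring the Kubernetes HPA scaling rule.

    current_metric: observed per-pod load (e.g. requests/sec per pod, assumed).
    target_metric:  per-pod load the service should run at.
    """
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("replicas and target metric must be positive")

    ratio = current_metric / target_metric

    # Skip scaling when load is already close to target, to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas

    # Scale proportionally to load, rounding up so capacity is never short.
    proposed = math.ceil(current_replicas * ratio)

    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    # Load is 3x the target, so 2 replicas grow to 6.
    print(desired_replicas(2, current_metric=150, target_metric=50))
    # Load drops well below target, so 4 replicas shrink to 2.
    print(desired_replicas(4, current_metric=20, target_metric=50))
```

The tolerance band is why a small fluctuation (say, 5% above target) leaves the replica count unchanged, while a demand spike scales the service up in a single step.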

The NIM Operator also simplifies the deployment of inference models. Developers no longer have to manage complicated setups by hand, because the system handles them automatically. It integrates well with current cloud systems and Kubernetes clusters. With this move, Nvidia aims to streamline enterprise AI deployment, so companies can build and manage smarter AI systems. Though small, this change has a significant effect on productivity and performance.

Nvidia has also strengthened its relationship with Oracle Cloud Infrastructure (OCI). Nvidia AI Enterprise is now fully supported on OCI, so users of the Oracle console can access Nvidia's entire AI stack directly. They can deploy more than 160 AI frameworks and tools with a few clicks, including software kits, pre-built microservices, and APIs. OCI also supports Nvidia's new NIM microservices, which allow large AI models to be deployed quickly and efficiently.

OCI customers using Grace CPUs or Blackwell GPUs will benefit the most, since the integration improves both scalability and performance. Nvidia and Oracle also offer joint support, which helps customers resolve problems faster. Companies can start using Nvidia's tools right away without switching platforms. With this release, Nvidia simplifies running enterprise AI applications in the cloud, giving teams more control and flexibility. Teams running large AI projects on Oracle's cloud stand to gain the most.

Nvidia AI Enterprise products are now a core part of Dell's AI Factory. Dell PowerEdge servers now ship with Nvidia tools pre-installed. Models built on Nvidia's newest architecture include the XE9650 and XE9665, which support Grace CPUs and Blackwell GPUs. This integration gives enterprises a ready-made AI platform. Dell also offers managed services, including 24/7 system monitoring, patching, and software upgrades, which reduces the workload on internal IT staff. The suite also includes LLMOps tools such as NeMo and NIM.

These tools handle large language models well. By working together, Nvidia and Dell have made installation simple, with the goal of accelerating AI development. The partnership gives businesses a complete hardware and software stack that delivers better performance, security, and speed. Deploying large AI workloads is easier when everything is consolidated, and the Dell and Nvidia alliance gives companies real-time AI processing power.

Nvidia is currently focused on enabling agentic AI, meaning AI systems capable of making decisions autonomously. The company has developed tools that support reasoning, allowing AI systems to plan tasks and understand complex data. The platform draws on NeMo agents and retrieval models, while NIM microservices connect multiple AI capabilities. Nvidia has also contributed new OpenUSD blueprints, which make it easier to build AI agents that work across platforms. Together, these tools help businesses build automation tools and smart assistants.

Agentic AI is set to shape how businesses operate, and Nvidia's upgrades make these complex systems easier to build. The tools integrate seamlessly with compute, networking, and storage resources, which makes deployment more efficient. Nvidia's goal is to bring agentic AI to every company, and these upgrades give developers the essential tools to do so. From pre-configured hardware support to code libraries, Nvidia now offers a complete platform for AI agents.

Nvidia's latest changes make its AI Enterprise Suite simpler and more powerful. The benefits include faster deployment, improved management, and deeper cloud integration. From low-level drivers to reasoning tools, everything is designed for practical use. Partnerships with Oracle, Dell, and others add platform flexibility, and tools such as the NIM Operator streamline AI operations. Companies now have what they need to build modern AI systems, and Nvidia provides full support across cloud, edge, and private data centers. These upgrades reflect Nvidia's ambition to dominate enterprise AI by making it faster, smarter, and ready for the future.

Advertisement

Apple joins the bullish AI investment trend with bold moves in AI chips, on-device intelligence, and strategic innovation

Explore top business process modeling techniques with examples to improve workflows, efficiency, and organizational performance

Learn the role of Python comments in writing readable and maintainable code. Understand their importance, types, and best practices in this clear and simplified guide

Discover how AI adoption is evolving in 2025, including critical shifts, business risks, and future growth opportunities.

Discover how Lucidworks’ new AI-powered platform transforms enterprise search with smarter, faster, and more accurate results

Learn everything about file handling in Python with this hands-on guide. Understand how to read and write files in Python through clear, practical methods anyone can follow

Know about 5 powerful AI tools that boost LinkedIn growth, enhance engagement, and help you build a strong professional presence

Discover Nvidia’s latest AI Enterprise Suite updates, featuring faster deployment, cloud support, advanced AI tools, and more

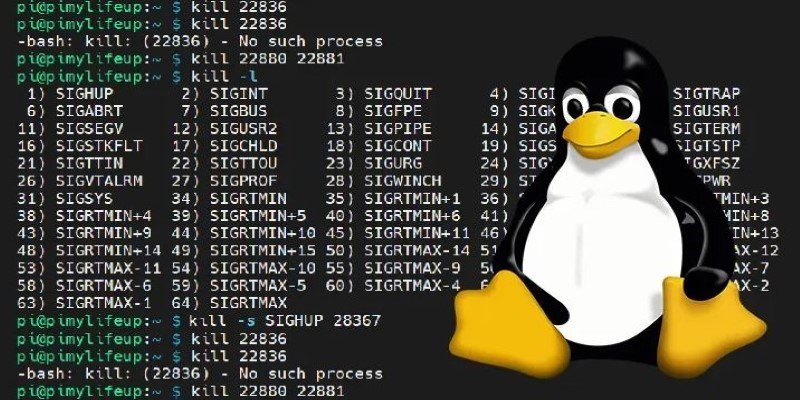

Learn the basics and best practices for updating file permissions in Linux with chmod. Understand numeric and symbolic modes, use cases, and safe command usage

Understand what are the differences between yield and return in Python. Learn how these two Python functions behave, when to use them, and how they impact performance and memory

How to use the with statement in Python to write cleaner, safer code. Understand its role in resource management and how it works with any context manager

How to kill processes in Linux using the kill command. Understand signal types, usage examples, and safe process management techniques on Linux systems