Some images don’t just show things—they say them. Menus, documents, infographics, and signs carry meaning that lives in both the visuals and the words. Reading the text alone doesn’t help if you miss how it fits into the scene. That’s the gap ConTextual fills. It’s a benchmark built to test how well multimodal models combine vision and language in settings where text matters. It isn’t about spotting words—it’s about understanding what they mean in context. ConTextual sets up challenges that force models to reason across both modalities rather than treating them as separate parts.

ConTextual is a benchmark from researchers at UCLA that focuses on scenes where text plays a key role. Unlike earlier benchmarks, it’s not about recognizing objects or running OCR. It asks models to reason across text and layout. Think of it like this: you’re shown a flyer that says “SALE ENDS TODAY” and asked, “What does the image suggest about urgency?” The model must understand placement, font size, and the visual intent of the text, not just the words themselves.

It includes 506 instruction-image-response examples spread across eight visual scenarios: time reading, shopping, navigation, abstract scenes, application usage, web usage, infographics, and miscellaneous natural scenes. Each scenario targets different reasoning challenges. Some questions ask the model to link text with graphics. Others test whether the model can track tone or logic. Models that rely only on OCR fail quickly. This benchmark is built to require more: true understanding, not surface-level recognition.

Most multimodal benchmarks let models get by with partial information. ConTextual breaks that pattern. A poster with multiple texts in different colours might include one line that reverses the overall message. The model needs to weigh all the elements to answer correctly. These examples reflect real-world settings, from social media to signage to educational content.

The benchmark has been used to test several vision-language models, including Flamingo, GPT-4V, BLIP-2, OpenFlamingo, and MiniGPT-4. Some models performed reasonably well, but many struggled when forced to connect words to layout or when faced with unfamiliar formats. In particular, models that do well on image captioning or generic Q&A often stumble here. It’s not just about reading—it’s about reasoning.

That’s the value ConTextual brings. Many existing benchmarks allow models to guess based on word frequency or visual hints. ConTextual avoids these shortcuts. It contains adversarial samples where the obvious choice is wrong, encouraging more careful interpretation. For instance, a sarcastic tweet with a matching background image might look sincere unless the model reads it in full.

What’s more, ConTextual includes detailed annotations. Researchers can trace why a model failed. Did it miss a word? Misinterpret a chart? Confuse tone? This makes it useful not just for scoring but for diagnosing model behaviour. It helps model builders identify gaps and design better training approaches.
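A minimal sketch of that kind of diagnosis, assuming each evaluated example has been tagged with an error label. The record fields (`category`, `correct`, `error_type`) and the label names are illustrative assumptions, not ConTextual's actual annotation schema.

```python
from collections import Counter

# Hypothetical evaluation records; field names and error labels are
# illustrative assumptions, not the benchmark's real schema.
results = [
    {"id": 1, "category": "infographic", "correct": False, "error_type": "misread_chart"},
    {"id": 2, "category": "screenshot", "correct": True, "error_type": None},
    {"id": 3, "category": "poster", "correct": False, "error_type": "missed_tone"},
    {"id": 4, "category": "infographic", "correct": False, "error_type": "misread_chart"},
    {"id": 5, "category": "poster", "correct": False, "error_type": "missed_word"},
]


def failure_breakdown(records):
    """Count failed examples by error type, most common first."""
    failures = [r["error_type"] for r in records if not r["correct"]]
    return Counter(failures).most_common()


breakdown = failure_breakdown(results)
for error_type, count in breakdown:
    print(f"{error_type}: {count}")
```

A tally like this turns a single accuracy number into a ranked list of failure modes, which is what makes the annotations useful for deciding what to fix first.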

Most models today treat text extraction as a separate step, using OCR and then feeding that into a language model. This works for simple scenes but fails when layout and visual design carry meaning. In charts, posters, or screenshots, the spatial relationship between text and image can be the key to understanding. ConTextual highlights this limitation.
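To make the limitation concrete, here is a small sketch under stated assumptions: `OcrToken` is a hypothetical stand-in for the output of an OCR engine, and the two posters are made-up examples. Flattening the tokens into a string, as an OCR-then-language-model pipeline would, makes two visually different posters indistinguishable.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OcrToken:
    """Hypothetical OCR output: a word plus where and how large it appears."""
    text: str
    x: int       # left edge in pixels
    y: int       # top edge in pixels
    height: int  # glyph height, a rough proxy for font size


def flatten(tokens):
    """OCR-then-LLM style input: words in reading order, layout discarded."""
    ordered = sorted(tokens, key=lambda t: (t.y, t.x))
    return " ".join(t.text for t in ordered)


def serialize_with_layout(tokens):
    """Keep position and size next to each word so layout stays visible."""
    ordered = sorted(tokens, key=lambda t: (t.y, t.x))
    return " ".join(f"{t.text}@({t.x},{t.y},h={t.height})" for t in ordered)


# Two made-up posters: same words, different visual emphasis.
poster_a = [OcrToken("SALE", 40, 20, 120), OcrToken("ENDS", 40, 160, 30),
            OcrToken("TODAY", 120, 160, 30)]
poster_b = [OcrToken("SALE", 40, 20, 30), OcrToken("ENDS", 40, 60, 30),
            OcrToken("TODAY", 40, 100, 140)]

print(flatten(poster_a) == flatten(poster_b))
print(serialize_with_layout(poster_a) == serialize_with_layout(poster_b))
```

Once the text is flattened, a downstream language model cannot tell whether the poster stresses the sale or the deadline. The layout-aware string is only one crude way to preserve that signal.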

To get better at these tasks, models need tighter integration between the visual and text streams. Rather than separating them, future models might use joint encoders or attention mechanisms that treat text tokens and image patches together. Some approaches fuse visual and textual features earlier in the model, which seems promising for these tasks.
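The idea of treating text tokens and image patches together can be illustrated with a toy example. This is a sketch, not the architecture of any model named here: the embeddings are made-up two-dimensional vectors, and the attention uses identity projections to stay short.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention_weights(sequence):
    """Single-head scaled dot-product attention weights.

    Queries and keys are the raw embeddings (identity projections),
    which keeps the sketch short; real models learn these projections.
    """
    dim = len(sequence[0])
    weights = []
    for q in sequence:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim)
                  for k in sequence]
        weights.append(softmax(scores))
    return weights


# Toy embeddings with made-up values: two text tokens, two image patches.
text_tokens = [[1.0, 0.0], [0.0, 1.0]]
image_patches = [[0.9, 0.1], [0.1, 0.9]]

# Early fusion: one joint sequence, so each text token attends to each patch.
joint = text_tokens + image_patches
attn = self_attention_weights(joint)

n_text = len(text_tokens)
for i in range(n_text):
    cross_modal = sum(attn[i][n_text:])
    print(f"text token {i}: attention mass on image patches = {cross_modal:.2f}")
```

Because both modalities sit in one sequence, every text token has a direct path to every image patch. A pipeline that encodes them separately does not get that path for free.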

There’s also a gap in the training data. Most multimodal datasets are built on natural images with captions—not text-heavy images. That limits what current models learn. ConTextual suggests the need for richer training sets with forms, diagrams, and real-world screenshots. These data types teach models to treat layout as part of the message.

Few-shot and zero-shot settings are another angle. Can a general-purpose model handle a new document layout with just a few examples? Current models often can't. Those trained on narrow document types do better but lose flexibility. ConTextual helps expose these tradeoffs by testing a wide range of image types and tasks.

By forcing models to reason across modalities in unfamiliar ways, ConTextual pushes toward systems that are more flexible and grounded. It's not about doing one thing well—it's about making the model understand how vision and language interact in the same space.

Multimodal reasoning in scenes rich with text remains an open challenge. ConTextual shows where the gaps are but also hints at how to close them. Better architecture, better data, and better training objectives are all needed. Models must learn not just to read but to place what they read in context—visually and logically.

The benchmark is compact but well-structured. It gives a clean signal of model performance across varied use cases. Expanding the dataset with similar examples could help drive future research. Another promising direction is integrating scene-aware OCR, where extraction depends on the task instead of being a fixed step.

ConTextual’s main strength is that it reflects real-world needs. Whether it’s reading instructions, interpreting a meme, or navigating a web page, text and layout often work together. Tools that ignore this are limited. Multimodal models that pass this benchmark won’t just be better performers—they’ll be better readers of the world around them.

Models today can detect text and describe images, but that’s not enough. They need to understand how text and visuals work together. ConTextual was built to test this missing link. It pushes multimodal models beyond recognition into interpretation. For developers and researchers, it offers a clear view of where current systems succeed—and where they still fall short. This matters for any tool meant to read and reason with humans. If your model can’t make sense of a busy poster or a screenshot packed with words, it’s not ready for real-world use. ConTextual helps get it there.

Advertisement

Explore top business process modeling techniques with examples to improve workflows, efficiency, and organizational performance

Discover Nvidia’s latest AI Enterprise Suite updates, featuring faster deployment, cloud support, advanced AI tools, and more

Understand what are the differences between yield and return in Python. Learn how these two Python functions behave, when to use them, and how they impact performance and memory

Learn the role of Python comments in writing readable and maintainable code. Understand their importance, types, and best practices in this clear and simplified guide

How the SQL DATEDIFF function helps calculate the gap between two dates. This guide covers syntax, use cases, and system compatibility

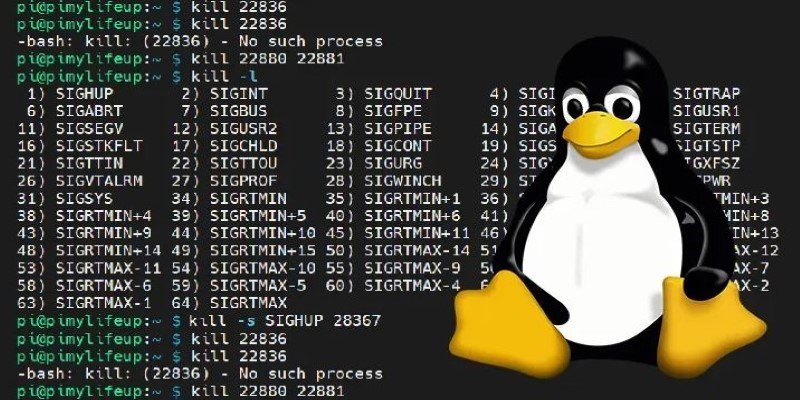

How to kill processes in Linux using the kill command. Understand signal types, usage examples, and safe process management techniques on Linux systems

Dell and Nvidia team up to deliver scalable enterprise generative AI solutions with powerful infrastructure and fast deployment

Microsoft’s new AI model Muse revolutionizes video game creation by generating gameplay and visuals, empowering developers like never before

How to deploy and fine-tune DeepSeek models on AWS using EC2, S3, and Hugging Face tools. This guide walks you through the process of setting up, training, and scaling DeepSeek models efficiently in the cloud

How to run a chatbot on your laptop with Phi-2 on Intel Meteor Lake. This setup offers fast, private, and cloud-free AI assistance without draining your system

Introducing ConTextual: a benchmark that tests how well multimodal models reason over both text and images in complex, real-world scenes like documents, infographics, posters, screenshots, and more

Explore Claude 3.7 Sonnet by Anthropic, an AI model with smart reasoning, fast answers, and safe, helpful tools for daily use