
Running large language models like DeepSeek on cloud infrastructure is no longer just for research labs or huge enterprises. With the right setup, it's possible to deploy and fine-tune these models using AWS. It can seem complicated at first—especially if you're not used to handling GPUs or cloud configurations—but breaking the process into smaller, manageable steps makes it doable. This article guides you through the essential steps, from selecting the right instance type to setting up your environment and customizing the model for your specific task.

The first and most important decision is your computing environment. DeepSeek models need GPUs for practical inference and training. AWS offers several GPU options, including EC2 instances in the p3, p4, and g5 families. For moderate-scale, parameter-efficient fine-tuning, a single-GPU instance such as g5.2xlarge (one 24 GB A10G) or p3.2xlarge (one 16 GB V100) is usually enough, although a 7B model in half precision already needs about 14 GB for its weights, so the 16 GB card leaves little headroom. For larger models or heavier tasks, more memory and multiple GPUs (such as in p4d instances) may be required.
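To make the sizing decision concrete, here is a rough back-of-the-envelope sketch. It only counts the memory needed to hold the weights; activations, optimizer state, and the KV cache come on top. The `headroom` fraction of 20% is an assumption chosen for illustration, not an AWS or DeepSeek recommendation.

```python
# Rough GPU memory estimate for holding model weights only.
# Activations, optimizer state, and the KV cache add to this figure.

BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "float16") -> float:
    """Return the memory needed for the weights, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits(num_params: float, gpu_memory_gb: float, dtype: str = "float16",
         headroom: float = 0.2) -> bool:
    """True if the weights fit while leaving `headroom` of the GPU free."""
    return weight_memory_gb(num_params, dtype) <= gpu_memory_gb * (1 - headroom)


if __name__ == "__main__":
    # GPU memory sizes for the two single-GPU instances mentioned above.
    for name, memory_gb in [("p3.2xlarge (V100)", 16), ("g5.2xlarge (A10G)", 24)]:
        print(name, "fits 7B in float16:", fits(7e9, memory_gb))
```

Under this estimate a 7B model in float16 fits comfortably on the 24 GB card but not on the 16 GB card once headroom is reserved, which is why quantization or a larger instance is worth considering on p3.2xlarge.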

Begin by creating an EC2 instance from a Deep Learning AMI (DLAMI). These images ship with GPU drivers, CUDA, cuDNN, and a framework such as PyTorch preinstalled; depending on the image, Hugging Face libraries may still need to be installed with pip. After launching the instance, connect over SSH and confirm that the environment is ready. You'll need Python 3.10+, PyTorch with GPU support, the Transformers library, and Accelerate from Hugging Face. Transformers and Accelerate simplify device placement and distributed training.
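A quick way to confirm the environment is a small check script like the sketch below. The function name `check_environment` and the package list are illustrative choices; adjust them to whatever your setup actually needs.

```python
import importlib.util
import sys

# Packages this guide relies on; extend the list as needed.
REQUIRED_PACKAGES = ["torch", "transformers", "accelerate"]


def check_environment(min_python=(3, 10)):
    """Return a dict describing whether each prerequisite is met."""
    report = {"python": sys.version_info >= min_python}
    for name in REQUIRED_PACKAGES:
        # find_spec returns None when the package is not installed.
        report[name] = importlib.util.find_spec(name) is not None
    # Only query CUDA if PyTorch is actually importable.
    if report["torch"]:
        import torch
        report["cuda"] = torch.cuda.is_available()
    else:
        report["cuda"] = False
    return report


if __name__ == "__main__":
    for item, ok in check_environment().items():
        print(f"{item:<14}{'ok' if ok else 'MISSING'}")
```

If `cuda` is reported as missing on a GPU instance, the usual suspects are a CPU-only PyTorch build or a driver problem, so check those before moving on.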

Storage is another factor to consider. Fine-tuning large models and handling datasets requires fast disk I/O. Use Amazon EBS with provisioned IOPS if your workload is heavy. You can also use Amazon S3 to store datasets and checkpoints. S3 buckets are not attached to an instance the way EBS volumes are; instead, use the AWS CLI or the Boto3 SDK to transfer files between the bucket and your EC2 instance.
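As a minimal sketch of the Boto3 route, the helpers below copy a single file to or from S3. The `s3://` URI parsing helper and the function names are assumptions made for this example; the bucket and key in the usage note are placeholders. Boto3 is imported inside the functions so the URI helper can be used without it.

```python
def parse_s3_uri(uri: str) -> tuple[str, str]:
    """Split 's3://bucket/key/path' into (bucket, key)."""
    if not uri.startswith("s3://"):
        raise ValueError(f"not an S3 URI: {uri!r}")
    bucket, _, key = uri[len("s3://"):].partition("/")
    if not bucket or not key:
        raise ValueError(f"S3 URI needs both bucket and key: {uri!r}")
    return bucket, key


def download(uri: str, local_path: str) -> None:
    """Copy one object from S3 to the local disk."""
    import boto3  # requires credentials, e.g. from the instance's IAM role
    bucket, key = parse_s3_uri(uri)
    boto3.client("s3").download_file(bucket, key, local_path)


def upload(local_path: str, uri: str) -> None:
    """Copy one local file to S3."""
    import boto3
    bucket, key = parse_s3_uri(uri)
    boto3.client("s3").upload_file(local_path, bucket, key)
```

For example, `download("s3://your-bucket/data/train.jsonl", "train.jsonl")` would fetch a dataset before training. The AWS CLI equivalent is `aws s3 cp`.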

Once your environment is ready, load the DeepSeek model. DeepSeek publishes its checkpoints on the Hugging Face Hub, so they load with the Transformers library in a few lines of code:

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "deepseek-ai/deepseek-llm-7b-base"

# Load the tokenizer and the half-precision weights, then move the model to the GPU
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype=torch.float16).cuda()
```

With the model loaded, you can now run inference directly or integrate it into your application. For real-time or batch inference, you can wrap the model into an API using frameworks like FastAPI or Flask and expose it through AWS API Gateway or an EC2 public IP.
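Running inference directly can look like the sketch below. It assumes a `model` and `tokenizer` loaded with Transformers as described earlier; the helper name `generate_text` and the default of 64 new tokens are illustrative.

```python
def generate_text(model, tokenizer, prompt: str, max_new_tokens: int = 64) -> str:
    """Generate a completion for `prompt` with a loaded causal language model."""
    # Tokenize and move the input tensors to the same device as the model.
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    # generate() returns a batch of token-id sequences; take the first one.
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # The decoded text includes the prompt followed by the completion.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

A call such as `generate_text(model, tokenizer, "Explain what an EC2 instance is.")` returns the prompt plus the generated continuation. The same function can later sit behind an API endpoint.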

If you're planning to serve it at scale, consider using Amazon SageMaker or ECS (Elastic Container Service). SageMaker handles much of the heavy lifting with container management and autoscaling, though it may cost more depending on your use. For lighter and more direct control, ECS with GPU-compatible instances gives more flexibility.

Inference-only setups are simpler. Fine-tuning adds another layer: you need to prepare a dataset, a training loop, and an optimization strategy.

Fine-tuning allows you to adapt DeepSeek to a specific task or domain—like customer support chat, summarization, or technical documentation. Start by defining your dataset. It can be a collection of text files, a JSONL file, or a dataset hosted on Hugging Face Hub. You’ll want to clean and tokenize your text using the same tokenizer used during pretraining:

```python
from datasets import load_dataset

dataset = load_dataset("your_dataset_path_or_name")

def tokenize(example):
    # Truncate or pad every example to a fixed length of 512 tokens
    return tokenizer(example["text"], truncation=True, padding="max_length", max_length=512)

tokenized_dataset = dataset.map(tokenize, batched=True)
```
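If your data starts out as raw text, one way to get it into a loadable shape is to clean it and write a JSONL file with a `text` field, matching the field the tokenize function reads. The cleaning rule here (collapsing whitespace and dropping empty entries) is a deliberately simple assumption; real datasets usually need task-specific cleaning.

```python
import json
import re


def clean_text(text: str) -> str:
    """Collapse runs of whitespace and strip both ends."""
    return re.sub(r"\s+", " ", text).strip()


def write_jsonl(texts, path: str) -> int:
    """Write non-empty cleaned texts to `path`, one JSON object per line.

    Returns the number of records written.
    """
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for text in texts:
            cleaned = clean_text(text)
            if cleaned:
                f.write(json.dumps({"text": cleaned}, ensure_ascii=False) + "\n")
                count += 1
    return count
```

The resulting file can then be loaded with `load_dataset("json", data_files="train.jsonl")`.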

Next, set up your training configuration. Hugging Face's Trainer API simplifies this process; for more control, use Accelerate or LoRA (Low-Rank Adaptation) with PEFT (Parameter-Efficient Fine-Tuning). These approaches reduce memory usage by updating only a small part of the model.

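```python
from peft import LoraConfig, get_peft_model, TaskType

config = LoraConfig(
    r=8,                                  # rank of the low-rank update matrices
    lora_alpha=32,                        # scaling factor applied to the update
    target_modules=["q_proj", "v_proj"],  # attention projections that receive adapters
    lora_dropout=0.05,
    bias="none",
    task_type=TaskType.CAUSAL_LM
)

# Wrap the base model so that only the LoRA adapter weights are trainable
model = get_peft_model(model, config)
```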
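To see why LoRA reduces memory use, it helps to count the parameters it adds. For a weight matrix with `d_in` inputs and `d_out` outputs, rank `r` adds `r * (d_in + d_out)` trainable values. The sketch below applies that formula; the hidden size of 4096 and the 30 layers are assumed values for a 7B-class model, so read the real ones from `model.config` before relying on the result.

```python
def lora_params_per_module(d_in: int, d_out: int, r: int) -> int:
    """LoRA adds A (r x d_in) and B (d_out x r) beside a d_out x d_in weight."""
    return r * (d_in + d_out)


def lora_trainable_params(hidden_size: int, num_layers: int, r: int,
                          modules_per_layer: int) -> int:
    """Total LoRA parameters when every targeted module is hidden x hidden."""
    per_module = lora_params_per_module(hidden_size, hidden_size, r)
    return num_layers * modules_per_layer * per_module


# Assumed shape values for illustration; check model.config for the real ones.
HIDDEN_SIZE = 4096
NUM_LAYERS = 30

# Two targeted modules per layer: q_proj and v_proj, with r=8 as configured above.
total = lora_trainable_params(HIDDEN_SIZE, NUM_LAYERS, r=8, modules_per_layer=2)
print(f"{total:,} trainable LoRA parameters")
```

Under these assumptions the adapters come to roughly 3.9 million parameters, a tiny fraction of the 7 billion in the base model. After wrapping the model, `model.print_trainable_parameters()` reports the actual figure.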

Set up your training arguments with batch size, number of epochs, learning rate, and logging steps. Then run training using Trainer or Accelerate. Save checkpoints periodically so that an interruption does not cost you the whole run. Evaluate the model on held-out validation samples and track the validation loss (cross-entropy) to monitor its performance.
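A minimal sketch of this setup with the Trainer API follows. Every hyperparameter value is a placeholder to tune for your data and GPU, and precision settings are left out because they depend on your hardware. The helper names `build_trainer` and `perplexity` are illustrative.

```python
import math

# Placeholder hyperparameters; tune these for your dataset and GPU memory.
HYPERPARAMS = {
    "per_device_train_batch_size": 2,
    "gradient_accumulation_steps": 8,   # effective batch size of 16 per device
    "num_train_epochs": 3,
    "learning_rate": 2e-4,
    "logging_steps": 10,
    "save_steps": 200,                  # write a checkpoint every 200 steps
    "save_total_limit": 2,              # keep only the two newest checkpoints
}


def perplexity(eval_loss: float) -> float:
    """Convert a mean cross-entropy loss into perplexity."""
    return math.exp(eval_loss)


def build_trainer(model, tokenizer, train_dataset, eval_dataset,
                  output_dir="checkpoints"):
    """Assemble a Trainer for causal language modeling."""
    from transformers import (DataCollatorForLanguageModeling, Trainer,
                              TrainingArguments)

    args = TrainingArguments(output_dir=output_dir, **HYPERPARAMS)
    # mlm=False makes the collator build next-token-prediction labels.
    collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)
    return Trainer(model=model, args=args, train_dataset=train_dataset,
                   eval_dataset=eval_dataset, data_collator=collator)
```

Typical usage is `trainer = build_trainer(...)`, then `trainer.train()`, then `perplexity(trainer.evaluate()["eval_loss"])` to get a more readable quality number from the validation loss.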

After training is complete, save the model and either push it to a private repository on the Hugging Face Hub or store it in S3. This way, you can easily reload it later or deploy it in a containerized setup.
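Saving and publishing can be wrapped in a small helper like the sketch below. The function name `save_and_publish` is an assumption for this example, and the repository ID you pass in is your own; pushing to the Hub also requires being logged in with a Hugging Face token.

```python
def save_and_publish(model, tokenizer, output_dir: str, repo_id=None) -> None:
    """Save the model and tokenizer locally, then optionally push them
    to a private Hugging Face Hub repository."""
    # Writes weights (or LoRA adapter weights) and tokenizer files to disk.
    model.save_pretrained(output_dir)
    tokenizer.save_pretrained(output_dir)
    if repo_id is not None:
        # private=True creates the repository as private if it does not exist yet.
        model.push_to_hub(repo_id, private=True)
        tokenizer.push_to_hub(repo_id, private=True)
```

With a PEFT-wrapped model, `save_pretrained` stores only the adapter weights, so the saved directory stays small and is quick to copy to S3.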

Once fine-tuned, you'll likely want to deploy the model for production. There are multiple ways to serve DeepSeek models in AWS. If you want managed endpoints with high reliability, SageMaker is a good fit: it provides model versioning, endpoint monitoring, and autoscaling. The trade-off is that it tends to cost more and prescribes how you package and deploy the model.

If you need more control or want to reduce costs, consider using Docker with an inference API and deploying it to an EC2 instance behind a load balancer. Your Docker container can include the fine-tuned model and serve requests using FastAPI, TorchServe, or even a custom Python server.
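To illustrate the "custom Python server" option, here is a minimal sketch built only on the standard library. It accepts a JSON body with a `prompt` field and returns a `completion`. The request format, the port, and the `generate_fn` callback are assumptions for this example; in practice FastAPI or TorchServe give you validation, concurrency, and batching that this sketch lacks.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def handle_payload(body: bytes, generate_fn) -> tuple[int, dict]:
    """Validate a request body and return (HTTP status, response payload)."""
    try:
        prompt = json.loads(body)["prompt"]
    except (ValueError, KeyError, TypeError):
        return 400, {"error": "expected a JSON body with a 'prompt' field"}
    if not isinstance(prompt, str):
        return 400, {"error": "'prompt' must be a string"}
    return 200, {"completion": generate_fn(prompt)}


def make_handler(generate_fn):
    """Build a request handler class bound to the given generation function."""
    class Handler(BaseHTTPRequestHandler):
        def do_POST(self):
            length = int(self.headers.get("Content-Length", 0))
            status, payload = handle_payload(self.rfile.read(length), generate_fn)
            body = json.dumps(payload).encode("utf-8")
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
    return Handler


def serve(generate_fn, port: int = 8080) -> None:
    """Serve requests until interrupted. Handles one request at a time."""
    HTTPServer(("0.0.0.0", port), make_handler(generate_fn)).serve_forever()
```

Inside the container, `generate_fn` would be a function that takes a prompt string and calls the fine-tuned model. Keeping request handling separate from model code makes it easy to swap this server for a production framework later.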

In a production setting, use CloudWatch for monitoring performance and Lambda functions for lightweight automation tasks, such as auto-shutdown of idle instances or notifications when GPU usage spikes. For secure access, use IAM roles and policies to control permissions for S3, EC2, and other services your model needs.
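The auto-shutdown idea can be sketched as a Lambda handler like the one below. Two assumptions to note: the `instance_id` field in the event is hypothetical, and the check uses the standard `CPUUtilization` metric as a proxy for idleness because GPU metrics are only available in CloudWatch if you publish them yourself, for example through the CloudWatch agent. The 5% threshold and one-hour window are placeholders.

```python
from datetime import datetime, timedelta, timezone


def is_idle(datapoints, threshold_percent: float = 5.0) -> bool:
    """True if there is data and every average stays below the threshold."""
    averages = [point["Average"] for point in datapoints]
    return bool(averages) and max(averages) < threshold_percent


def lambda_handler(event, context):
    """Stop the instance named in the event if it was idle for the last hour."""
    import boto3  # included in the AWS Lambda Python runtime

    instance_id = event["instance_id"]  # hypothetical event field
    end = datetime.now(timezone.utc)
    stats = boto3.client("cloudwatch").get_metric_statistics(
        Namespace="AWS/EC2",
        MetricName="CPUUtilization",
        Dimensions=[{"Name": "InstanceId", "Value": instance_id}],
        StartTime=end - timedelta(hours=1),
        EndTime=end,
        Period=300,               # one datapoint per five minutes
        Statistics=["Average"],
    )
    if is_idle(stats["Datapoints"]):
        boto3.client("ec2").stop_instances(InstanceIds=[instance_id])
        return {"stopped": instance_id}
    return {"stopped": None}
```

The function's IAM role needs permission to read CloudWatch metrics and to stop EC2 instances. Treating "no datapoints" as not idle is a deliberate choice so that a metrics gap never shuts down a busy machine.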

Don’t forget cost management. Large models can quickly incur high usage fees. Use spot instances when possible, automate instance shutdowns during idle times, and monitor your GPU utilization to avoid over-provisioning.
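A quick calculation shows how much these two habits can matter. The sketch below compares an on-demand instance left running around the clock with a spot instance that only runs during active hours. It contains no real prices: plug in current hourly rates from the AWS pricing pages for your region and instance type.

```python
def run_cost(hours: float, hourly_rate: float) -> float:
    """Cost of keeping one instance up for `hours` at `hourly_rate`."""
    return hours * hourly_rate


def monthly_savings(on_demand_rate: float, spot_rate: float,
                    active_hours_per_day: float, days: int = 30) -> float:
    """Savings from spot pricing plus shutting down outside active hours,
    compared with an on-demand instance that runs 24 hours a day."""
    always_on = run_cost(24 * days, on_demand_rate)
    managed = run_cost(active_hours_per_day * days, spot_rate)
    return always_on - managed
```

Keep in mind that spot instances can be interrupted, so this comparison only holds for workloads that checkpoint regularly and can resume.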

Running DeepSeek models on AWS doesn't need a research lab or a huge budget. With the right setup, you can get them running in a few hours. The key is knowing what each AWS component does, setting up your environment properly, and being realistic about computing and storage. Fine-tuning gives you the flexibility to adapt the model without starting from scratch. Once you pass the initial setup, scaling and managing get easier with the right tools. Whether it's a chatbot or a summarizer, DeepSeek and AWS together give you the control and speed to build useful applications.

Advertisement

Learn how to track real-time AI ROI, measure performance instantly, save costs, and make smarter business decisions every day

Explore how Microsoft’s KOSMOS-2 blends language and visual inputs to create smarter, more grounded AI responses. It’s not just reading text—it’s interpreting images too

Dell and Nvidia team up to deliver scalable enterprise generative AI solutions with powerful infrastructure and fast deployment

Learn everything about file handling in Python with this hands-on guide. Understand how to read and write files in Python through clear, practical methods anyone can follow

Explore Claude 3.7 Sonnet by Anthropic, an AI model with smart reasoning, fast answers, and safe, helpful tools for daily use

Apple joins the bullish AI investment trend with bold moves in AI chips, on-device intelligence, and strategic innovation

Master data preparation with Power Query in Power BI. Learn how to clean, transform, and combine datasets using simple steps that streamline your reporting process

How Introducing the Chatbot Guardrails Arena helps test and compare AI chatbot behavior across models with safety, tone, and policy checks in an open, community-driven environment

Automation Anywhere boosts RPA with generative AI, offering intelligent automation tools for smarter and faster workflows

How llamafiles simplify LLM execution by offering a self-contained executable that eliminates setup hassles, supports local use, and works across platforms

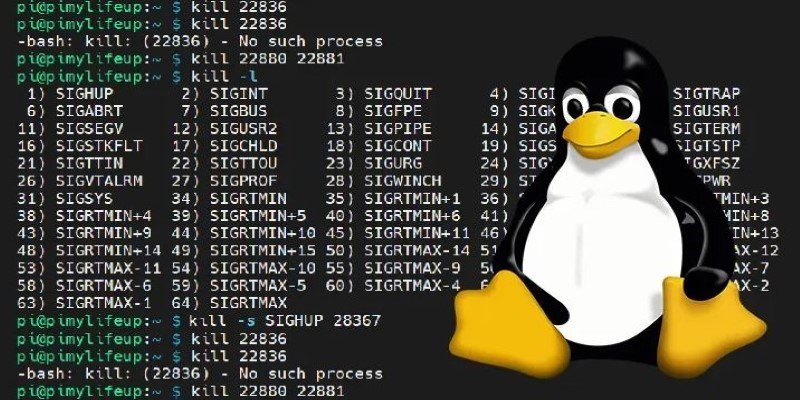

How to kill processes in Linux using the kill command. Understand signal types, usage examples, and safe process management techniques on Linux systems

Microsoft’s new AI model Muse revolutionizes video game creation by generating gameplay and visuals, empowering developers like never before