
Sometimes, the simplest tasks are the ones we need most often. Let's say you've got a folder full of files—maybe spreadsheets, logs, or data dumps—and all you want is a plain list of what's in there. No fluff, no UI, just file names you can work with in Python. The good news is Python gives you more than one way to do that, from basic to more flexible options.

Whether you're writing automation, cleanup scripts, or a data pipeline, being able to quickly get the list of files in a directory can save you time. In this guide, you'll find eleven practical approaches to getting that list, each explained clearly and simply.

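Using os.listdir()

The os module has been part of Python from the start, and os.listdir() is the quickest way to get everything inside a directory. It returns the names of all entries, files and folders alike, without distinguishing between them. The names come back in arbitrary order, and the special '.' and '..' entries are left out.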

```python
import os

files = os.listdir('/path/to/folder')
print(files)
```

This gives you a list of names. If you only want files, you’ll need to filter manually.

```python
import os

folder = '/path/to/folder'
only_files = [f for f in os.listdir(folder) if os.path.isfile(os.path.join(folder, f))]
print(only_files)
```

It’s fast but doesn’t return full paths unless you build them yourself.
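
To get full paths for files only, in a predictable order (os.listdir() makes no ordering guarantee), you could wrap the steps above in a small helper. This is a minimal sketch; the function name list_file_paths is hypothetical and not part of the os module.

```python
import os


def list_file_paths(folder):
    """Return sorted full paths of the regular files directly inside folder.

    Hypothetical helper for illustration. Subdirectories are skipped, and
    the result is sorted because os.listdir() returns names in arbitrary order.
    """
    paths = []
    for name in os.listdir(folder):
        full_path = os.path.join(folder, name)  # build the full path ourselves
        if os.path.isfile(full_path):           # True for files and symlinks to files
            paths.append(full_path)
    return sorted(paths)
```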

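Using os.scandir()

os.scandir() is often faster than os.listdir() when you also need each entry's type or attributes. It returns an iterator of os.DirEntry objects, which carry the entry's name and path and can usually tell you whether it is a file or a directory without an extra system call.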

```python
import os

# The with block closes the scandir iterator when we're done with it.
with os.scandir('/path/to/folder') as entries:
    files = [entry.name for entry in entries if entry.is_file()]

print(files)
```

You can also grab full paths:

```python
with os.scandir('/path/to/folder') as entries:
    full_paths = [entry.path for entry in entries if entry.is_file()]

print(full_paths)
```

This is cleaner than os.listdir() if you're checking file types or need full paths.
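
Because DirEntry objects hold on to what they learn during the scan, os.scandir() pairs well with per-file details such as sizes. The sketch below assumes you want regular files only, with symlinks excluded; the helper name file_sizes is hypothetical.

```python
import os


def file_sizes(folder):
    """Map each regular file directly inside folder to its size in bytes.

    Hypothetical helper for illustration. DirEntry.is_file() can usually
    answer from data gathered during the scan, so no separate
    os.path.isfile() call is needed per entry.
    """
    sizes = {}
    with os.scandir(folder) as entries:
        for entry in entries:
            # follow_symlinks=False keeps symlinks from being counted as files
            if entry.is_file(follow_symlinks=False):
                sizes[entry.name] = entry.stat(follow_symlinks=False).st_size
    return sizes
```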

Using os.walk()

os.walk() is the right tool when you want every file in a directory and all of its subdirectories. It's a generator that yields a 3-tuple for each directory it visits: the directory's path, the names of its subdirectories, and the names of its files.

```python
import os

for root, dirs, files in os.walk('/path/to/folder'):
    for file in files:
        print(os.path.join(root, file))
```

It's built for deep folder trees. You get every file, no matter how deeply nested.
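
os.walk() also lets you prune the tree as you go: with the default topdown=True, removing names from the dirs list in place stops the walk from descending into them. A minimal sketch, assuming you want paths relative to the starting folder and want to skip hidden (dot-prefixed) files and folders; walk_files is a hypothetical helper name.

```python
import os


def walk_files(folder, skip_hidden=True):
    """Return sorted paths, relative to folder, of every file in the tree.

    Hypothetical helper for illustration. With os.walk()'s default
    topdown=True, editing dirnames in place controls which subdirectories
    are visited next.
    """
    results = []
    for dirpath, dirnames, filenames in os.walk(folder):
        if skip_hidden:
            # Slice assignment mutates the list os.walk() will recurse into.
            dirnames[:] = [d for d in dirnames if not d.startswith(".")]
        for name in filenames:
            if skip_hidden and name.startswith("."):
                continue
            full_path = os.path.join(dirpath, name)
            results.append(os.path.relpath(full_path, folder))
    return sorted(results)
```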

Using glob.glob()

If you like wildcards, glob is your friend. It’s good for pattern matching, like listing only .txt or .csv files.

```python
import glob

txt_files = glob.glob('/path/to/folder/*.txt')
print(txt_files)
```

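It returns paths that include the folder part of your pattern (full paths here, since the pattern is absolute), not bare file names. Keep in mind that a directory whose name matches the pattern is returned too, and that * does not match names starting with a dot. For recursive searches, use ** together with recursive=True.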

```python
txt_files_recursive = glob.glob('/path/to/folder/**/*.txt', recursive=True)
print(txt_files_recursive)
```

Perfect for file filtering by extension.
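
Two details are worth handling when you build glob patterns from a folder path: special characters such as [ in the folder name would be read as wildcards, and directories that match the pattern are returned alongside files. This sketch addresses both with glob.escape() and os.path.isfile(); glob_files is a hypothetical helper name.

```python
import glob
import os


def glob_files(folder, pattern, recursive=False):
    """Return sorted paths of regular files under folder matching pattern.

    Hypothetical helper for illustration. glob.escape() stops characters
    such as '[' in the folder name from acting as wildcards, and the
    os.path.isfile() check drops directories that happen to match.
    """
    full_pattern = os.path.join(glob.escape(folder), pattern)
    matches = glob.glob(full_pattern, recursive=recursive)
    return sorted(p for p in matches if os.path.isfile(p))
```

With recursive=True, a pattern like os.path.join('**', '*.txt') also matches files directly inside folder, because ** can stand for zero directories.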

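Using pathlib.Path.iterdir()

Python 3.4 introduced pathlib, a more modern, object-oriented way to work with files and paths. Path.iterdir() yields every item in a directory, files and subdirectories alike, so filter with is_file() if you only want files.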

```python
from pathlib import Path

files = [f for f in Path('/path/to/folder').iterdir() if f.is_file()]
print(files)
```

The output is a list of Path objects, not strings, which gives you extra power. For example:

```python
for f in files:
    print(f.name)    # Just the name
    print(f.suffix)  # Last extension, including the dot, e.g. '.txt'
    print(f.stem)    # Name without the last extension
```

It's often cleaner and more readable than the equivalent os and os.path calls.
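
Path attributes make it easy to organize the results. As an illustration, this sketch groups file names by lowercase extension using Path.suffix; the helper name files_by_suffix and the choice to lowercase extensions are assumptions, not something pathlib does for you.

```python
from collections import defaultdict
from pathlib import Path


def files_by_suffix(folder):
    """Group the names of files directly inside folder by lowercase extension.

    Hypothetical helper for illustration. Files without an extension land
    under the empty string, since Path.suffix is '' for them.
    """
    groups = defaultdict(list)
    for path in Path(folder).iterdir():
        if path.is_file():
            groups[path.suffix.lower()].append(path.name)
    # Sort each group and return a plain dict for predictable output.
    return {suffix: sorted(names) for suffix, names in groups.items()}
```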

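Using Path.glob() and Path.rglob()

Path.glob() works like glob.glob() but keeps everything in the pathlib style. It yields Path objects lazily, so wrap it in list() when you need an actual list.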

```python
from pathlib import Path

txt_files = list(Path('/path/to/folder').glob('*.txt'))
print(txt_files)
```

And if you want to go into subfolders:

```python
recursive_txt = list(Path('/path/to/folder').rglob('*.txt'))
print(recursive_txt)
```

rglob() is just like glob('**/*.ext') but easier to read.
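
rglob() yields paths that start with the folder you searched from, and it matches directories as well as files. If you'd rather have short, portable relative paths of files only, one approach is relative_to() plus as_posix(). A minimal sketch; find_relative is a hypothetical helper name.

```python
from pathlib import Path


def find_relative(folder, pattern):
    """Return sorted '/'-separated paths, relative to folder, of matching files.

    Hypothetical helper for illustration. rglob() also yields directories
    whose names match, so is_file() filters those out.
    """
    root = Path(folder)
    return sorted(
        p.relative_to(root).as_posix()
        for p in root.rglob(pattern)
        if p.is_file()
    )
```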

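Using fnmatch

If you need basic pattern matching but don't want to bring in glob, use fnmatch. It matches plain name strings against shell-style patterns; on case-insensitive platforms such as Windows, fnmatch.fnmatch() also ignores case.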

```python
import os
import fnmatch

all_files = os.listdir('/path/to/folder')
pattern = '*.csv'

csv_files = [f for f in all_files if fnmatch.fnmatch(f, pattern)]
print(csv_files)
```

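You'll need to build full paths yourself, and because the names come from os.listdir(), directories that match the pattern are included too. It's simple, lightweight, and good for custom patterns. fnmatch.filter(all_files, pattern) gives the same result as the list comprehension in a single call.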
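
fnmatch also makes it easy to accept several patterns at once. This sketch assumes you want case-insensitive matching that behaves the same on every OS, so it lowercases both sides and uses fnmatch.fnmatchcase(); the helper name match_any is hypothetical.

```python
import fnmatch
import os


def match_any(folder, patterns):
    """Return sorted full paths of files in folder matching any of patterns.

    Hypothetical helper for illustration. Names and patterns are lowercased
    and compared with fnmatch.fnmatchcase(), so matching ignores case on
    every OS. Lowercasing also changes ranges like [A-Z], so avoid those.
    """
    lowered = [p.lower() for p in patterns]
    results = []
    for name in os.listdir(folder):
        full_path = os.path.join(folder, name)
        if not os.path.isfile(full_path):
            continue  # skip directories whose names happen to match
        if any(fnmatch.fnmatchcase(name.lower(), p) for p in lowered):
            results.append(full_path)
    return sorted(results)
```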

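Using os.path.splitext()

Sometimes, you already have a list of files but want to filter by extension. os.path.splitext() splits a file name into a (root, extension) pair, and the extension keeps its leading dot: 'report.txt' becomes ('report', '.txt').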

```python
import os

folder = '/path/to/folder'

# Keep regular files only, then compare the extension (which includes the dot).
files = [f for f in os.listdir(folder) if os.path.isfile(os.path.join(folder, f))]
txt_files = [f for f in files if os.path.splitext(f)[1] == '.txt']

print(txt_files)
```

It doesn’t list files by itself, but works well as a filter once you’ve listed the items.
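
To accept several extensions, and to treat .TXT and .txt alike, you can compare the lowercased splitext() result against a set. A minimal sketch with a hypothetical filter_by_ext helper:

```python
import os


def filter_by_ext(names, extensions):
    """Keep names whose extension (compared case-insensitively) is in extensions.

    Hypothetical helper for illustration. Each entry in extensions is
    expected to include the leading dot, e.g. '.txt'.
    """
    wanted = {ext.lower() for ext in extensions}
    return [name for name in names if os.path.splitext(name)[1].lower() in wanted]
```

Two splitext() behaviors to keep in mind: only the last suffix counts, so backup.tar.gz has the extension .gz, and leading dots are ignored, so a dotfile such as .bashrc has no extension at all.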

Using subprocess

Sometimes, you want to use the system's ls or dir command and capture its output in Python. That's where the subprocess module comes in.

```python
import subprocess

result = subprocess.run(['ls', '/path/to/folder'], stdout=subprocess.PIPE, text=True)

# splitlines() returns [] for an empty folder; strip().split('\n') would return [''].
files = result.stdout.splitlines()
print(files)
```

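On Windows, swap ls for dir /b (bare format, names only) and set shell=True, because dir is built into the cmd.exe shell rather than being a standalone program: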

```python
result = subprocess.run('dir /b', cwd='C:\\path\\to\\folder', stdout=subprocess.PIPE, text=True, shell=True)

files = result.stdout.splitlines()
print(files)
```

It's not the cleanest method: it depends on the OS, includes subdirectories in the output, and breaks on file names that contain newlines. Still, it's useful when scripting around system tools.
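
If you do shell out, it helps to fail loudly and to parse the output safely. This sketch assumes a POSIX system with ls available; check=True raises subprocess.CalledProcessError when ls fails (for example, on a missing folder), and splitlines() avoids the [''] result that split('\n') gives for empty output. ls_names is a hypothetical helper name.

```python
import subprocess


def ls_names(folder):
    """Return the entry names that `ls -1` prints for folder.

    Hypothetical helper for illustration; assumes a POSIX system with ls.
    Like ls itself, the result includes subdirectories and omits dotfiles.
    """
    result = subprocess.run(
        ["ls", "-1", "--", folder],  # '--' stops a folder named '-x' being read as an option
        capture_output=True,         # collect stdout and stderr (Python 3.7+)
        text=True,                   # decode output to str
        check=True,                  # raise CalledProcessError if ls fails
    )
    # splitlines() gives [] for empty output; ''.split('\n') would give [''].
    return result.stdout.splitlines()
```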

Using zipfile

If your “directory” is a ZIP file, you can treat it like a folder and list its contents.

```python
import zipfile

# 'zf' avoids shadowing Python's built-in zip()
with zipfile.ZipFile('archive.zip', 'r') as zf:
    file_list = zf.namelist()

print(file_list)
```

This doesn't work on regular folders, but it's handy if you're processing compressed files and want to see what's inside without extracting them first. Note that namelist() can also include directory entries, whose names end with a slash.
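
To skip directory entries and see how large each file is once extracted, you can walk infolist() instead of namelist(). A minimal sketch, assuming Python 3.6+ for ZipInfo.is_dir(); zip_file_sizes is a hypothetical helper name.

```python
import zipfile


def zip_file_sizes(archive_path):
    """Map each file entry (not directory) in a ZIP archive to its uncompressed size.

    Hypothetical helper for illustration. ZipInfo.is_dir() is true for
    entries whose names end in '/', which namelist() would include.
    """
    with zipfile.ZipFile(archive_path) as zf:
        return {info.filename: info.file_size for info in zf.infolist() if not info.is_dir()}
```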

Using importlib.resources

If you're working with files bundled inside a Python package (like templates, config files, or data), importlib.resources can list them without needing full paths. importlib.resources.files() requires Python 3.9 or later.

```python
import importlib.resources

files = importlib.resources.files('your_package').iterdir()
file_list = [f.name for f in files if f.is_file()]

print(file_list)
```

Replace 'your_package' with the actual module or package name.

This method is useful when you're distributing files with your code and want to read or list them safely across different environments without relying on absolute paths. It's especially helpful for libraries or tools that include internal file assets.
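
iterdir() only looks one level deep. To list everything bundled in a package, including files in subfolders, you can recurse over the objects files() returns. A sketch, assuming Python 3.9+; list_package_files is a hypothetical helper name, and skipping __pycache__ is a choice made here, not something importlib.resources does.

```python
import importlib.resources


def list_package_files(package):
    """Return sorted '/'-separated paths of every file bundled in package.

    Hypothetical helper for illustration. It walks the Traversable objects
    returned by importlib.resources.files() rather than filesystem paths,
    so it does not assume the package is a plain directory on disk.
    """
    results = []

    def walk(node, prefix):
        for child in node.iterdir():
            if child.is_dir():
                if child.name != "__pycache__":  # skip compiled bytecode folders
                    walk(child, prefix + child.name + "/")
            elif child.is_file():
                results.append(prefix + child.name)

    walk(importlib.resources.files(package), "")
    return sorted(results)
```

For instance, list_package_files('json') lists the modules of the standard library's json package, such as decoder.py.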

Python offers plenty of straightforward ways to list files in a directory, depending on your needs. If you're after speed and simplicity, os.listdir() or Path.iterdir() are easy to reach for, and os.scandir() helps when you also need file types. For pattern-based filtering, glob, Path.glob(), or fnmatch give you flexible control. Need recursion? Go with os.walk() or Path.rglob(). You can even pull in shell commands with subprocess or inspect ZIP files with zipfile. Each method has its own flavor: some are cleaner, others are more powerful, but they all get the job done. Once you know what kind of list you need, picking the right method is just common sense.

Advertisement

Learn how to track real-time AI ROI, measure performance instantly, save costs, and make smarter business decisions every day

How Introducing the Chatbot Guardrails Arena helps test and compare AI chatbot behavior across models with safety, tone, and policy checks in an open, community-driven environment

Learn the basics and best practices for updating file permissions in Linux with chmod. Understand numeric and symbolic modes, use cases, and safe command usage

How llamafiles simplify LLM execution by offering a self-contained executable that eliminates setup hassles, supports local use, and works across platforms

Explore Claude 3.7 Sonnet by Anthropic, an AI model with smart reasoning, fast answers, and safe, helpful tools for daily use

Learn everything about file handling in Python with this hands-on guide. Understand how to read and write files in Python through clear, practical methods anyone can follow

Explore CodeGemma, Google's latest AI model designed for developers. This open-source tool brings flexibility, speed, and accuracy to coding tasks using advanced code LLMs

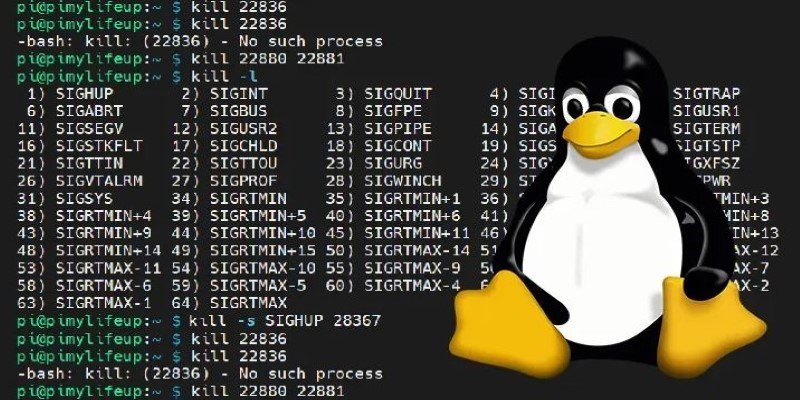

How to kill processes in Linux using the kill command. Understand signal types, usage examples, and safe process management techniques on Linux systems

Discover how Lucidworks’ new AI-powered platform transforms enterprise search with smarter, faster, and more accurate results

How to deploy and fine-tune DeepSeek models on AWS using EC2, S3, and Hugging Face tools. This guide walks you through the process of setting up, training, and scaling DeepSeek models efficiently in the cloud

Apple joins the bullish AI investment trend with bold moves in AI chips, on-device intelligence, and strategic innovation

Know about 5 powerful AI tools that boost LinkedIn growth, enhance engagement, and help you build a strong professional presence